quantum research

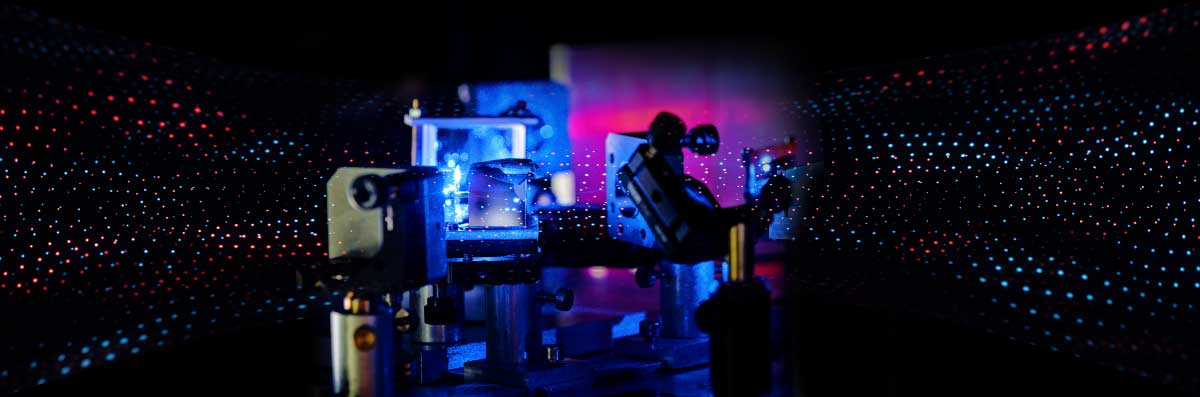

Quantum research studies phenomena such as superposition, uncertainty, and entanglement with the goal of producing them reliably on demand and making them useful in a range of disciplines.

Quantum key distribution (QKD), for example, enables tap-proof encryption of data by exploiting the quantum properties of light. In quantum communication, single-photon sources (SPS) can be used to transmit encrypted data with optimal performance. Our fast TDCs facilitate the development of low-noise single-photon counting receiver modules, which convert single-photon detection events into streams of time tags that are synchronized to the excitation laser source.
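To illustrate what "time tags synchronized to the excitation laser" can mean in practice, here is a minimal sketch in Python. It assumes two sorted lists of timestamps in picoseconds, one for laser sync pulses and one for photon clicks. The function names and the example numbers are made up for illustration and are not part of any cronologic API.

```python
from bisect import bisect_right


def delays_to_sync(photon_tags_ps, sync_tags_ps):
    """Return the delay of each photon tag to the latest preceding sync pulse.

    Both lists hold timestamps in picoseconds; sync_tags_ps must be sorted.
    Photons that arrive before the first sync pulse are skipped.
    """
    delays = []
    for t in photon_tags_ps:
        i = bisect_right(sync_tags_ps, t)  # number of sync pulses at or before t
        if i == 0:
            continue
        delays.append(t - sync_tags_ps[i - 1])
    return delays


def histogram(delays_ps, bin_width_ps, n_bins):
    """Sort the delays into n_bins bins of equal width; out-of-range delays are dropped."""
    counts = [0] * n_bins
    for d in delays_ps:
        b = d // bin_width_ps
        if 0 <= b < n_bins:
            counts[b] += 1
    return counts


# Made-up example: laser pulses every 10 ns, photons roughly 1.2 ns after some of them
sync = [0, 10_000, 20_000, 30_000]
photons = [1_200, 11_150, 31_300]

delays = delays_to_sync(photons, sync)
print(delays)                     # [1200, 1150, 1300]
print(histogram(delays, 500, 4))  # [0, 0, 3, 0]
```

A histogram of this kind shows how tightly the detected photons follow the laser pulses, which is one reason low timing noise in the receiver matters.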

Quantum sensing, on the other hand, uses the principles of quantum physics to detect tiny variations in physical quantities, such as minute changes in gravity. It exploits properties of photonic or solid-state quantum systems, such as quantum entanglement, quantum interference, and quantum state reduction, to push past the current limitations of sensing technology toward the bounds set by the Heisenberg uncertainty principle.

In quantum computing, the collective properties of quantum states described above are used to perform computations. Efforts to build a physical quantum computer currently focus on technologies such as transmons, ion traps, and topological qubits, all of which aim to generate high-quality qubits. The expectation is that quantum computers can solve certain computational problems so quickly that no classical computer could keep up in a reasonable amount of time (quantum supremacy).

A typical example of the use of cronologic TDCs in this area is the research of Wolfgang Löffler:

Wolfgang Löffler's project in Leiden, the Netherlands, seeks answers to current questions in quantum research.

The single photon is now considered one of the most suitable candidates for carrying information in future information networks. Photonic quantum technologies are considered unique because decoherence hardly plays a role once photon losses are minimized. The photon also seems to be the only suitable carrier for transmitting qubits over distance, since it can travel through glass fibers. In Wolfgang Löffler's laboratory, effects of quantum physics such as the photon blockade (the interaction of light with individual semiconductor quantum dots in optical cavities) are used to generate an ordered stream of individual photons. One of his team's projects focuses on developing a single-photon light source that is placed directly in a light-conducting glass fiber. Applications for such a light source abound, e.g. in photonic quantum gates, quantum repeaters, and short-term quantum memories. Emerging fields such as quantum sensing, quantum key distribution, and of course long-distance data transmission over fiber-optic networks are waiting for such building blocks for quantum cryptographic networks. Logical operations at the nanoscale on an integrated quantum photonic semiconductor chip are also possible today and form a possible basic building block for the development of quantum computers.

What is the character of this light?

Today, physicists generally regard laser light as classical light, and it is comparatively easy to produce in the lab. Looked at more closely, however, laser light consists of a mixture of packets, each containing a certain number of photons. If you counted the photons in laser light, the numbers would follow a Poisson distribution and would consequently be "maximally random". This is one of the reasons why it is difficult to convert such light into individual photons. It would therefore not be possible to build a quantum computer with laser light alone. In Wolfgang Löffler's research group, however, what is known as quantum light is generated. This is a "new" form of light that has only been producible in high quality for a few years, and only with the help of complex laboratory setups. As mentioned above, quantum light consists of individually generated photons, here available at a gigahertz rate. The achievable intensity is so high that the light can even be recorded with an ordinary handheld camera.
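The Poisson statistics mentioned here can be made concrete with a few lines of Python. The sketch below assumes an idealized laser pulse with a given mean photon number. The function names are our own, and the mean values are arbitrary examples, not measured data.

```python
import math


def poisson_pmf(n, mean):
    """Probability of finding exactly n photons when the mean photon number is `mean`."""
    return math.exp(-mean) * mean**n / math.factorial(n)


def photon_number_stats(mean, n_max=50):
    """Return (average, variance) of the photon number, summed up to n_max photons."""
    probs = [poisson_pmf(n, mean) for n in range(n_max + 1)]
    avg = sum(n * p for n, p in enumerate(probs))
    var = sum((n - avg) ** 2 * p for n, p in enumerate(probs))
    return avg, var


for mean in (1.0, 0.1):
    p0 = poisson_pmf(0, mean)
    p1 = poisson_pmf(1, mean)
    p_multi = 1.0 - p0 - p1  # two or more photons in the same pulse
    print(f"mean={mean}: P(0)={p0:.3f}  P(1)={p1:.3f}  P(>=2)={p_multi:.4f}")
```

Two things stand out. The variance equals the mean, so the photon number fluctuates as much as a purely random process allows. And the probability of getting exactly one photon never exceeds 1/e (about 37 %), which is reached at a mean of one photon per pulse. Attenuating the laser further reduces the multi-photon events, but mostly produces empty pulses.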

Even though all light consists of photons, the photons themselves show fascinatingly different properties. Wolfgang Löffler's team investigates the distribution of photon states in quantum light, which differ in frequency, polarization, and bandwidth. The key to secure data transmission is precisely this ability to distinguish between photons. To determine the character of his light, Löffler primarily observes the emission rate, i.e. the number of photons detected within a certain, extremely small time window. The measurement is carried out with single-photon detectors, which generate a TTL pulse for every detection; each pulse is recorded as a click. The raw photon counts are stored as time tags, at a rate of approx. 5 million time tags per second, so the amount of data is considerable. The cronologic TDC models HPTDC or TimeTagger4 are used for the measurement.
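As a rough illustration of what "photons per time window" means for a stream of time tags, the following Python sketch bins clicks into consecutive windows and derives an average rate. It assumes timestamps in picoseconds. The function names and example values are hypothetical and do not reflect the data format of any particular TDC.

```python
from collections import Counter


def counts_per_window(time_tags_ps, window_ps):
    """Count detector clicks in consecutive windows of window_ps picoseconds."""
    counts = Counter(t // window_ps for t in time_tags_ps)
    if not counts:
        return []
    n_windows = max(counts) + 1
    return [counts.get(i, 0) for i in range(n_windows)]


def mean_rate_hz(time_tags_ps, duration_ps):
    """Average click rate in Hz over a measurement of duration_ps picoseconds."""
    return len(time_tags_ps) * 1e12 / duration_ps


def mean_spacing_ns(rate_hz):
    """Average time between two clicks in nanoseconds for a given rate."""
    return 1e9 / rate_hz


# Made-up example: five clicks within three 1 ns windows
tags = [100, 250, 900, 1_100, 2_700]
print(counts_per_window(tags, 1_000))  # [3, 1, 1]

# At 5 million time tags per second, clicks are 200 ns apart on average
print(mean_spacing_ns(5e6))            # 200.0
```

The average spacing hides the interesting part: for characterizing the light, it is the distribution of counts across windows that matters, not just the mean.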

How exactly is quantum light generated?

To generate single-photon states, an electron in a semiconductor quantum dot, i.e. an artificial atom with dimensions of only a few nanometers, is typically excited by means of light. In the simplest picture, the electron can occupy one of two energy levels. Every time it relaxes to the lower level, a single photon is emitted. Because of the Pauli exclusion principle, the quantum dot can hold only a single excitation at a time, so the relaxing electron sends out exactly one photon.

The quantum dot is located in an optical microcavity whose walls reflect light; the cavity is also used to send photons from the quantum dot efficiently into a glass fiber. An electric field tunes the quantum dot energetically into or out of resonance with the optical cavity. If the quantum dot is out of resonance, the laser light is transmitted: the light "tunnels" through the mirror. If the quantum dot is shifted into resonance, it scatters photons from the laser light, but only one after the other, and it also changes their polarization. This can be used to separate the individual photons from the laser light. A variation of this is the "unconventional photon blockade" effect, in which the quantum interference of different photon polarizations is cleverly exploited to generate a stream of individual photons.

With this structure, scientists can generate individual photons at a rate of around one billion per second. The interaction between light and quantum dot within this optical resonator is now so good that the absorption of a single photon in the quantum dot is usually registered. The emission of a single photon can likewise be captured in the subsequent optical stage with very high probability. Löffler's method of generating quantum light appears to scale much better than the classical method of spontaneous parametric down-conversion (SPDC).

On the current state of research

The researchers recently used the single photons described above to create artificial light states that are very similar to those of coherent laser light and are therefore relatively insensitive to interference during transmission. The coherent light is assembled from its individual components. However, there is a subtle but important difference from classical light: artificial quantum light shows quantum entanglement between photons emitted at different times, so-called photonic cluster states. The correlation of the photons in a linear cluster state is so strong that quantum information can be transmitted with it. Currently, around three to four quanta are entangled with one another (e.g. with the help of an electron spin). The goal of Löffler's research is now to develop a scalable, deterministic source of 20 or more entangled photons in order to create a powerful resource for photonic quantum computing.
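For readers who want to see what a linear cluster state looks like on paper, here is a small textbook-style sketch in Python. It is not a model of the experiment: it simply writes down the state obtained by preparing n idealized qubits in an equal superposition and applying a controlled-Z operation between each pair of neighbors, and then checks the defining stabilizer property. All function names are our own.

```python
import math


def bits_of(index, n):
    """Bits of `index` as a list of length n, qubit 0 being the leftmost bit."""
    return [(index >> (n - 1 - k)) & 1 for k in range(n)]


def linear_cluster_state(n):
    """Amplitudes of the n-qubit linear cluster state, indexed by bitstring.

    Every basis state has the same magnitude; its sign flips once for each
    neighboring pair of qubits that are both 1 (the effect of controlled-Z).
    """
    norm = 1 / math.sqrt(2**n)
    amps = []
    for index in range(2**n):
        bits = bits_of(index, n)
        flips = sum(bits[k] & bits[k + 1] for k in range(n - 1))
        amps.append(norm * (-1) ** flips)
    return amps


def apply_stabilizer(amps, n, i):
    """Apply K_i = Z(i-1) X(i) Z(i+1) to the state; missing neighbors are skipped."""
    out = [0.0] * len(amps)
    for index, amp in enumerate(amps):
        bits = bits_of(index, n)
        sign = 1
        if i > 0 and bits[i - 1]:
            sign = -sign
        if i < n - 1 and bits[i + 1]:
            sign = -sign
        flipped = index ^ (1 << (n - 1 - i))  # X on qubit i flips that bit
        out[flipped] += sign * amp
    return out


n = 4
state = linear_cluster_state(n)
unchanged = all(
    all(math.isclose(a, b) for a, b in zip(apply_stabilizer(state, n, i), state))
    for i in range(n)
)
print(unchanged)  # True
```

The check at the end expresses the correlation in a compact form: flipping any one qubit is exactly compensated by the signs carried by its neighbors. This chain of neighbor-to-neighbor correlations is what makes cluster states a resource for quantum information processing.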

At this point, we would like to give visitors to our website who are interested in possible applications of our products a little insight. We ourselves are primarily concerned with our customers' data acquisition requirements; we neither conduct this kind of quantum research nor build quantum computers. We simply enjoy learning from our customers and sharing what we have learned with those who are interested in our work. The following contents are therefore not scientific treatises; they also reflect subjective impressions.